A plenary discussion at the AGILE 2010 Conference in Guimarães led the Association to back an effort to develop a rating scheme for publication outlets in Geographic Information Science. A short paper at AGILE 2011 sketched the idea and led to constructive discussions and to backing from the AGILE Council for a broader international project. The initiative began officially in early 2012, led by the University of Münster (Werner Kuhn and Christoph Brox) with assistance from Karen Kemp (University of Southern California). A paper discussing the initiative at greater length was presented at AGILE 2012 in Avignon. The results of the initiative were presented and discussed at AGILE 2013 in Leuven. Follow-up activities are currently being planned, with a tendency toward seeking consensus on rating criteria rather than on ratings.

The results of the survey are summarized in a PDF document, which can be downloaded here.

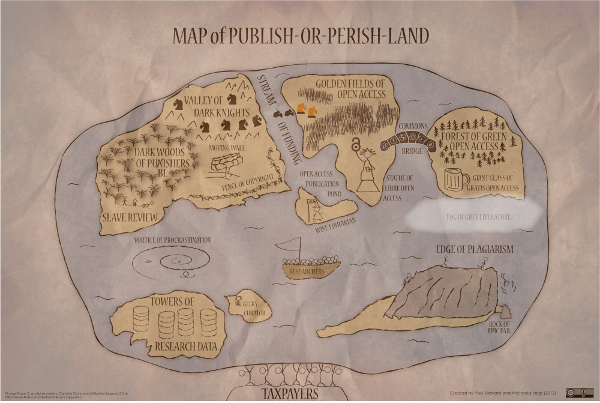

The visualization was created by Paul Vierkant and Michaela Voigt using Adobe Illustrator. The map was inspired by an XKCD comic dating from 2007. [Fn 33] Except where otherwise noted, this work is licensed under http://creativecommons.org/licenses/by/3.0/

The Problem

Researchers in our field often find it difficult to argue the strengths and weaknesses of “our” journals and conferences to colleagues in established disciplines like Geography, Statistics, or Computer Science. Yet this is becoming more and more important in the context of external evaluations of research labs or individual scientists, where scientific output is sometimes undervalued, potentially leading to financial and structural penalties. Reasons for the difficulties include the dominance of biased indices like Thomson-Reuters’, the relative importance of conferences in our field, the different criteria used in geography and computer science, and the variable meaning of “high impact factors”. In addition to projecting a clear image of publication quality levels to outsiders, it is also important to help our own junior researchers target their publishing efforts and to raise general awareness about quality differences. Existing analyses of publication outlets are limited to journals and lack consensus and maintenance processes.

The Idea

This AGILE Initiative targets a simple, transparent, community-driven and maintainable guide to quality levels of journals and conferences. We decided to limit the outlets rated to those with a declared focus on Geographic Information Science (including all its naming varieties as well as spatial analysis) and to define mappings to journals and conferences in other fields. The goal is not to rank outlets, only to rate them, using just three categories. The transparency of the chosen criteria, as well as of the whole rating process, is considered essential. A Delphi survey is well suited to these requirements.

The Survey

The Delphi method of consensus building has been used in many different policy-making domains to help choose amongst competing priorities. Within our field, the technique was used in the mid-90s to develop consensus on the contents of a post-graduate GIScience curriculum for Europe and, more recently, to evaluate GIScience journals. Its basic approach is simple: an iterative series of surveys with a single group of respondents evolves from open-ended opinion solicitation to structured voting on a limited number of options.
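As a rough illustration of the structured-voting stage, the following Python sketch tallies one round of category votes and separates outlets on which respondents largely agree from those that would go back to the group in the next round. It is only a sketch under stated assumptions: the function name `tally_round`, the 70% agreement threshold, and the outlet names are hypothetical and are not taken from the initiative's actual procedure.

```python
from collections import Counter

# The three rating categories named in the survey objectives.
CATEGORIES = ("A", "B", "C")


def tally_round(votes, threshold=0.7):
    """Tally one voting round.

    votes: dict mapping an outlet name to a list of category votes.
    threshold: assumed share of valid votes the leading category needs
               for the outlet to count as settled (hypothetical value).
    Returns (settled, unresolved): a dict of outlet -> category and a
    list of outlets that need another round.
    """
    settled, unresolved = {}, []
    for outlet, ballots in votes.items():
        # Ignore anything that is not one of the three categories.
        valid = [b for b in ballots if b in CATEGORIES]
        if not valid:
            unresolved.append(outlet)
            continue
        category, count = Counter(valid).most_common(1)[0]
        if count / len(valid) >= threshold:
            settled[outlet] = category
        else:
            unresolved.append(outlet)
    return settled, unresolved


# Hypothetical ballots from four respondents.
example_votes = {
    "Journal X": ["A", "A", "A", "B"],
    "Conference Y": ["A", "B", "C", "B"],
}
settled, unresolved = tally_round(example_votes)
```

In this example "Journal X" would be settled as category A (three of four votes), while "Conference Y" would be carried into a further round because no category reaches the assumed threshold.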

The objectives of the first survey in this initiative are to:

- Identify journals and refereed conference proceedings with a declared focus on GIScience (by any name);

- Rate these outlets into three categories (A, B, and C);

- Collect information about other publication outlets in which GIScience research is published, but which have their focus outside GIScience.

The survey will start during the Avignon conference, and can be accessed here. Any GIScience researcher who has a doctorate and has published in at least five different journals with broad international readership can participate, whether they are AGILE members or not. Respondents will be permitted to register once they affirm that they meet these requirements. The list of respondents (but of course not the individual responses) will be made public at the end of the survey process. We plan a second Delphi survey in 2013 in which the established GIScience outlet ratings will be mapped to outlets in other fields. A process for revision and maintenance of the ratings and mappings will also be developed.

The overall goal is to produce a GIScience journal and conference rating that is solidly founded on community input, endorsed by AGILE and other organizations, and related to publication outlets outside our field.

- Council: Martin Raubal

- Lead: Werner Kuhn, Karen Kemp, Christoph Brox

- Member 1: Universität Münster

- Other Members: ETH-Zürich